Send AI Agents to Meetings

Use Recall.ai's Output Media API to create meeting bots that can listen and respond in real time

Output Media lets your app control what the bot outputs into a live meeting, for both audio and video. It’s the main API for building bots that “speak” and “show” content in the call.

With Output Media, the bot can play audio responses and update its video output with MP4s or GIFs, all with low latency. You can use it for interactive agents that respond in real time, or for simpler use cases like playing a specific audio clip or video at the moment you choose. The bot outputs the content through either its camera feed or its screenshare.

Popular use cases for Output Media include AI sales agents, coaches, recruiters, interviewers, screensharing slides or videos, and more.

For example implementations and use cases, see our demo repos:

Platform Support

| Platform | Bot Configuration (`output_media`) |
|---|---|
| Zoom | ✅ |
| Google Meet | ✅ |
| Microsoft Teams | ✅ |
| Cisco Webex | ✅ |
| Slack Huddles | ❌ |

Quickstart

How output media works

Output Media works by having the bot run a webpage you control and then stream that page’s audio and video into the meeting. The bot can present the webpage either as a screenshare or as its camera video, so whatever your page renders is what participants see and hear.

Why Use a Webpage?

A webpage gives developers an easy and familiar interface for creating real-time audio and visual responses: you can update charts, render an avatar, or play synthesized speech, all with standard HTML/CSS/JavaScript.

The bot sends your webpage the following data in real time:

- A stream of audio data from the meeting

- The transcripts for the audio data (if configured)

You can pass this data to third-party speech-to-speech models or other LLMs for processing and analysis. Any audio or video your webpage then plays is streamed back into the meeting by the bot.

Starting Output Media automatically when the bot joins via the Create Bot API

You can use the `output_media` configuration in the Create Bot endpoint to stream the audio and video contents of a webpage into your meeting. The bot can display the webpage either as a screenshare or through its own camera.

Each output in `output_media` (such as `camera` in the example below) takes the following parameters:

- `kind`: The type of media to stream (currently only `webpage` is supported)
- `config`: The webpage configuration (currently only `url` is supported)

Let's look at an example call to the Create Bot endpoint:

```json
// POST /api/v1/bot/
{
  "meeting_url": "https://us02web.zoom.us/j/1234567890",
  "bot_name": "Recall.ai Notetaker",
  "output_media": {
    "camera": {
      "kind": "webpage",
      "config": {
        "url": "https://www.recall.ai"
      }
    }
  }
}
```

The example above tells Recall to create a bot that continuously streams the contents of the Recall.ai homepage into the provided meeting.
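If you call the Create Bot endpoint from JavaScript, a minimal sketch like the one below can assemble the same request body and send it with `fetch`. The helper names (`buildCreateBotBody`, `createOutputMediaBot`), the `us-west-2` base URL (copied from the curl examples on this page; your region may differ), and passing the API key as the raw `Authorization` header value (mirroring those curl examples) are assumptions for illustration.

```javascript
// Hypothetical helper: builds a Create Bot request body that streams a webpage
// through the bot's camera, mirroring the JSON example above.
function buildCreateBotBody({ meetingUrl, botName, pageUrl }) {
  if (!meetingUrl || !pageUrl) {
    throw new Error("meetingUrl and pageUrl are required");
  }
  return {
    meeting_url: meetingUrl,
    bot_name: botName ?? "Recall.ai Notetaker",
    output_media: {
      camera: {
        kind: "webpage", // currently the only supported kind
        config: { url: pageUrl }, // currently the only supported config field
      },
    },
  };
}

// Hypothetical wrapper: sends the body to POST /api/v1/bot/.
// Assumes Node 18+ (global fetch) and the us-west-2 region used in the curl examples.
async function createOutputMediaBot(apiKey, options, baseUrl = "https://us-west-2.recall.ai") {
  const response = await fetch(`${baseUrl}/api/v1/bot/`, {
    method: "POST",
    headers: {
      Authorization: apiKey, // same header value as the curl examples
      accept: "application/json",
      "content-type": "application/json",
    },
    body: JSON.stringify(buildCreateBotBody(options)),
  });
  if (!response.ok) {
    throw new Error(`Create Bot failed with HTTP ${response.status}`);
  }
  return response.json();
}
```

For example, `createOutputMediaBot(process.env.RECALL_API_KEY, { meetingUrl, pageUrl: "https://www.recall.ai" })` would send the request shown in the JSON example above.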

Starting Output Media manually via the Start Output Media API

You can also choose to start streaming a webpage by calling the Output Media endpoint at any time while the bot is in a call.

The parameters for the request are the same as the output_media configuration.

```bash
curl --request POST \
  --url https://us-west-2.recall.ai/api/v1/bot/{bot_id}/output_media/ \
  --header "Authorization: ${RECALL_API_KEY}" \
  --header 'accept: application/json' \
  --header 'content-type: application/json' \
  --data-raw '
{
  "camera": {
    "kind": "webpage",
    "config": {
      "url": "https://recall.ai"
    }
  }
}
'
```

Stopping Output Media via the API

You can stop the bot's media output at any point while the bot is streaming media by calling the Stop Output Media endpoint.

```bash
curl --request DELETE \
  --url https://us-west-2.recall.ai/api/v1/bot/{bot_id}/output_media/ \
  --header "Authorization: ${RECALL_API_KEY}" \
  --header 'accept: application/json' \
  --header 'content-type: application/json' \
  --data-raw '{ "camera": true }'
```

The request above stops the bot from outputting the webpage through its camera, ending the Output Media stream.
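The same start and stop calls can be made from JavaScript. The sketch below only builds each request (URL plus `fetch` options), which keeps it easy to inspect before sending. The helper names, the `us-west-2` base URL (copied from the curl examples; your region may differ), and the bot ID format check are assumptions for illustration.

```javascript
// Assumed region, copied from the curl examples above.
const RECALL_BASE_URL = "https://us-west-2.recall.ai";

// Hypothetical helper: builds the URL and fetch() options for
// POST/DELETE /api/v1/bot/{bot_id}/output_media/.
function buildOutputMediaRequest(method, botId, apiKey, body) {
  // Guard against path injection; bot IDs are assumed to be UUID-like strings.
  if (!/^[\w-]+$/.test(botId)) {
    throw new Error(`Unexpected bot id: ${botId}`);
  }
  return {
    url: `${RECALL_BASE_URL}/api/v1/bot/${botId}/output_media/`,
    init: {
      method,
      headers: {
        Authorization: apiKey, // same header value as the curl examples
        accept: "application/json",
        "content-type": "application/json",
      },
      body: JSON.stringify(body),
    },
  };
}

// Start streaming a webpage through the bot's camera (same shape as output_media).
function startOutputMediaRequest(botId, apiKey, pageUrl) {
  return buildOutputMediaRequest("POST", botId, apiKey, {
    camera: { kind: "webpage", config: { url: pageUrl } },
  });
}

// Stop the camera output, matching the DELETE example's body.
function stopOutputMediaRequest(botId, apiKey) {
  return buildOutputMediaRequest("DELETE", botId, apiKey, { camera: true });
}

// Sends a request built above; requires Node 18+ or a browser for global fetch.
async function sendOutputMediaRequest({ url, init }) {
  const response = await fetch(url, init);
  if (!response.ok) throw new Error(`HTTP ${response.status} from ${url}`);
  return response;
}
```

Keeping request construction separate from sending makes it straightforward to log or unit-test the exact payload your backend sends.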

Making the bot interactive

So far you've seen how to stream any webpage to a meeting. But to build a real AI agent, you need a webpage that can listen to information coming from the meeting and respond dynamically. This means creating a webpage that can receive live meeting data and update in real time.

Setting up your development environment

For development, you'll want to be able to iterate quickly on your webpage and see changes reflected immediately in the bot. The easiest way to do this is:

- Run a local development server with your webpage. You can either create your own or clone one of our sample repos (Real-Time Translator, Voice Agent)

- Expose it publicly using a tunneling service like ngrok

- Point your bot to the public tunnel URL

This lets you edit your code locally and instantly see the results in your meeting bot. Once everything is configured, here's what your Create Bot request should look like:

```json
// POST /api/v1/bot/
{
  "meeting_url": "https://us02web.zoom.us/j/1234567890",
  "bot_name": "Recall.ai Notetaker",
  "output_media": {
    "camera": {
      "kind": "webpage",
      "config": {
        // traffic to this URL will be forwarded to your localhost
        "url": "https://my-static-domain.ngrok-free.app"
      }
    }
  }
}
```

Listening to meeting audio from the webpage

When your bot starts streaming your webpage, the webpage automatically gets access to the live audio from the meeting.

No User Interaction Required

Normally, accessing microphone audio requires user permission and interaction (like a button click). However, the bot grants microphone permissions automatically, so your webpage can access audio immediately without user prompts or click events.

You can access a MediaStream object and its audio track from the webpage running inside the bot. The following example shows how to get samples of the meeting audio in AudioData objects:

```javascript
// Run inside an async function or a <script type="module"> (top-level await)
// The bot grants microphone permission automatically, so no prompt appears
const mediaStream = await navigator.mediaDevices.getUserMedia({ audio: true });
const meetingAudioTrack = mediaStream.getAudioTracks()[0];

// MediaStreamTrackProcessor (Chromium-based browsers) exposes the track as AudioData frames
const trackProcessor = new MediaStreamTrackProcessor({ track: meetingAudioTrack });
const trackReader = trackProcessor.readable.getReader();

while (true) {
  const { value: audioData, done } = await trackReader.read();
  if (done) break; // the track has ended

  // ... do something with the audio data ...

  audioData.close(); // release the frame's memory once you are done with it
}
```

From here, you can process the audio however you need. For example, pipe it to OpenAI's Realtime API for speech-to-speech processing, then output the AI's audio response back to the meeting participants through your webpage's audio elements. This creates a fully interactive voice agent that can have natural conversations with meeting attendees.
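Many speech-to-speech APIs expect 16-bit PCM at a fixed sample rate rather than `AudioData` frames. The sketch below shows one way to convert; it assumes mono audio (only the first channel is read), a target of 24 kHz PCM16 (check your provider's documentation for the format it actually expects), and plain linear interpolation for resampling. The function names are hypothetical.

```javascript
// Copies the first channel of a WebCodecs AudioData frame into a Float32Array.
// Browser-only: AudioData is not available in Node.
function audioDataToFloat32(audioData) {
  const options = { planeIndex: 0, format: "f32-planar" };
  const samples = new Float32Array(audioData.allocationSize(options) / 4); // 4 bytes per float
  audioData.copyTo(samples, options);
  return samples;
}

// Resamples mono audio with linear interpolation (no anti-aliasing filter).
function resampleLinear(input, fromRate, toRate) {
  if (fromRate === toRate) return Float32Array.from(input);
  const ratio = fromRate / toRate;
  const outLength = Math.floor(input.length / ratio);
  const output = new Float32Array(outLength);
  for (let i = 0; i < outLength; i++) {
    const pos = i * ratio;
    const i0 = Math.floor(pos);
    const i1 = Math.min(i0 + 1, input.length - 1);
    const frac = pos - i0;
    output[i] = input[i0] * (1 - frac) + input[i1] * frac;
  }
  return output;
}

// Converts float samples in [-1, 1] to Int16 PCM, clamping out-of-range values.
// Int16Array uses the platform's byte order (little-endian on typical hardware).
function floatTo16BitPCM(samples) {
  const pcm = new Int16Array(samples.length);
  for (let i = 0; i < samples.length; i++) {
    const s = Math.max(-1, Math.min(1, samples[i]));
    pcm[i] = Math.round(s < 0 ? s * 0x8000 : s * 0x7fff);
  }
  return pcm;
}
```

Inside the read loop, before `audioData.close()`, you could call `floatTo16BitPCM(resampleLinear(audioDataToFloat32(audioData), audioData.sampleRate, 24000))` and send the result to your model. Linear interpolation without a low-pass filter can alias when downsampling; an `AudioContext` created with a target `sampleRate` is one alternative if quality matters.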

Accessing real-time meeting data from the webpage

The bot exposes a WebSocket endpoint that your webpage can use to retrieve real-time meeting data while it streams audio and video to the call. Currently, only real-time transcripts are supported. You can connect to it from your webpage as follows:

```javascript
const ws = new WebSocket('wss://meeting-data.bot.recall.ai/api/v1/transcript');

ws.onmessage = (event) => {
  // Join the transcribed words into a single string
  const message = JSON.parse(event.data).transcript?.words?.map((w) => w.text)?.join(' ');
  // ... your logic to handle real-time transcripts
};

ws.onopen = () => {
  console.log('Connected to WebSocket server');
};

ws.onclose = () => {
  console.log('Disconnected from WebSocket server');
};
```

Messages from the `/api/v1/transcript` endpoint have the same shape as the `data` object in Real-time transcription.

Transcribing the bot's audio is currently not supported.
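If you want transcript handling to tolerate malformed frames or a dropped connection, a sketch like the one below is one option. It assumes the message shape used in the example above (`transcript.words[].text`); `extractTranscriptText` and `connectTranscripts` are hypothetical names, and reconnecting with capped exponential backoff is a design choice of this sketch, not documented Recall behavior.

```javascript
const TRANSCRIPT_URL = "wss://meeting-data.bot.recall.ai/api/v1/transcript";

// Pulls plain text out of one transcript message, or returns null if the
// frame is not valid JSON or contains no words.
function extractTranscriptText(raw) {
  let message;
  try {
    message = JSON.parse(raw);
  } catch (e) {
    return null;
  }
  const words = message?.transcript?.words;
  if (!Array.isArray(words) || words.length === 0) return null;
  return words.map((w) => w.text).join(" ");
}

// Opens the transcript socket and reconnects with exponential backoff
// (1s, 2s, 4s, ... capped at maxDelayMs) if the connection drops.
function connectTranscripts(onText, { url = TRANSCRIPT_URL, maxDelayMs = 10000 } = {}) {
  let attempt = 0;
  let socket = null;
  let stopped = false;

  const open = () => {
    socket = new WebSocket(url);
    socket.onopen = () => {
      attempt = 0; // reset the backoff after a successful connection
    };
    socket.onmessage = (event) => {
      const text = extractTranscriptText(event.data);
      if (text) onText(text);
    };
    socket.onclose = () => {
      if (stopped) return;
      const delayMs = Math.min(1000 * 2 ** attempt, maxDelayMs);
      attempt += 1;
      setTimeout(open, delayMs);
    };
  };

  open();
  // Call the returned function to close the socket without reconnecting.
  return () => {
    stopped = true;
    socket?.close();
  };
}
```

In the webpage, `const stop = connectTranscripts((text) => console.log(text));` would log each transcript chunk until `stop()` is called.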

Debugging Your Webpage

Local development

When running your webpage locally, you will need to expose it through a public URL using a tunneling tool such as ngrok.

If your webpage is making API requests to another local server, you will also need to ensure that these are routed through a publicly exposed endpoint as well (using ngrok or similar). If you don't do this, your webpage may not function as expected since API calls to localhost endpoints are blocked within the bot's Output Media process.
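During development it can help to catch these URLs before they reach the bot. The sketch below is a hypothetical check (`isReachableFromBot` is not part of any Recall SDK). Requiring `https:`/`wss:` is an assumption based on browser rules: `getUserMedia` needs a secure context, and an HTTPS page cannot call plain `http://` or `ws://` endpoints (mixed content).

```javascript
// Hosts that only resolve to the machine the browser runs on.
const LOOPBACK_HOSTS = new Set(["localhost", "0.0.0.0", "[::1]"]);

// Hypothetical development check: returns false for URLs the bot's
// Output Media browser is unlikely to reach.
function isReachableFromBot(rawUrl) {
  let url;
  try {
    url = new URL(rawUrl);
  } catch (e) {
    return false; // not an absolute URL
  }
  // Require TLS for both pages/API calls and WebSockets.
  if (url.protocol !== "https:" && url.protocol !== "wss:") return false;
  const host = url.hostname;
  if (LOOPBACK_HOSTS.has(host) || host.endsWith(".localhost")) return false;
  if (/^127\./.test(host)) return false; // IPv4 loopback range
  return true;
}
```

For example, a small `fetch` wrapper in your page could `console.warn` whenever this returns false; the warning then shows up in the remote Devtools console described below.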

Accessing Chrome Devtools

During development, you will likely need to debug issues with your Output Media bot's webpage. Recall provides an easy way to connect to the webpage's Chrome Devtools while the bot is running. Follow the instructions below to access your bot's Devtools.

- Send an Output Media bot to your meeting, and wait for it to start streaming

- Log in to your Recall.ai dashboard

- Select Bot Explorer in the sidebar

- In the Bot Explorer app, search for your bot by ID

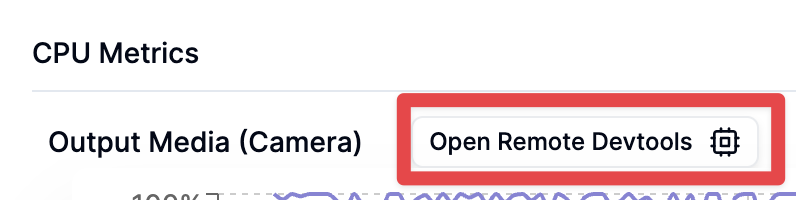

- Open the "Debug Data" tab for your bot. Then under CPU Metrics, click the "Open Remote Devtools" button. A devtools inspector connected to your live bot will open in a new tab.

This opens a full Chrome inspector connected to your bot's browser. You can inspect elements, check console logs, monitor network requests, and debug just like you would locally.

Bot must be alive

Since Output Media Devtools are exposed by the bot itself and CPU metrics are reported in real time, they are only available while the bot is actively in a call.

Profiling CPU usage

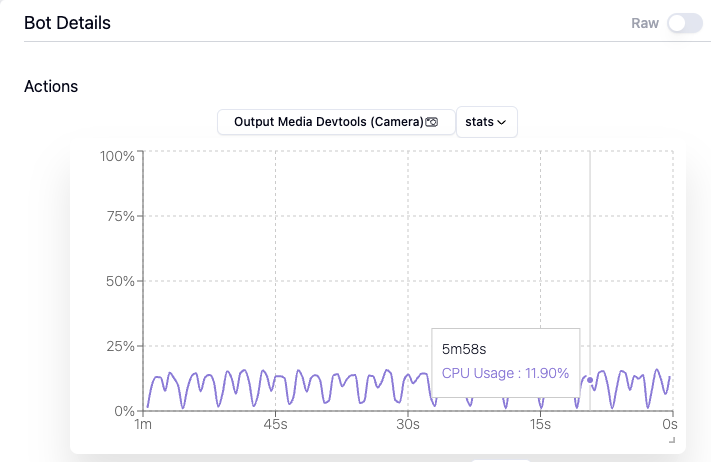

You can also view the CPU usage for an individual bot in the "Bot Details" section. Use this graph to uncover performance bottlenecks in your webpage that might be causing it to lag or perform poorly.

Addressing audio and video issues: bot variants

While we expose CPU metrics to help you identify and address any performance issues on your end, sometimes this is out of your control and you just need more CPU power or hardware acceleration. Below is a breakdown of the compute resources available to the instance running your webpage:

| Variant | CPU | Memory | WebGL | Camera/Screenshare Resolution | Camera/Screenshare Framerate |
|---|---|---|---|---|---|
| `web` (default) | 250 millicores | 750 MB | ❌ Unsupported | 1280x720 px | 15 fps |
| `web_4_core` | 2250 millicores | 5250 MB | ❌ Unsupported | 1280x720 px | 15 fps |
| `web_gpu` | 6000 millicores | 13250 MB | ✅ Supported | 1280x720 px | 15 fps |

To use these configurations, specify the variant in your Create Bot request. For example, this is how you can specify that your bot should use the 4-core variant on all platforms:

```json
{
  ...
  "variant": {
    "zoom": "web_4_core",
    "google_meet": "web_4_core",
    "microsoft_teams": "web_4_core"
  }
}
```

These bots run on larger machines, which can help address CPU bottlenecks that degrade the audio and video quality of your Output Media feature.
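If you build Create Bot requests in code, a small helper can apply one variant to every platform and reject typos early. This is a sketch: the helper names are hypothetical, and it only covers the three platform keys shown in the example above, since other platform keys are not shown on this page.

```javascript
// Variant names and platform keys taken from the table and example above.
const KNOWN_VARIANTS = ["web", "web_4_core", "web_gpu"];
const PLATFORM_KEYS = ["zoom", "google_meet", "microsoft_teams"];

// Hypothetical helper: builds the "variant" object, applying the same
// variant to each platform key.
function buildVariantConfig(variant, platforms = PLATFORM_KEYS) {
  if (!KNOWN_VARIANTS.includes(variant)) {
    throw new Error(`Unknown variant "${variant}"; expected one of ${KNOWN_VARIANTS.join(", ")}`);
  }
  return Object.fromEntries(platforms.map((platform) => [platform, variant]));
}

// Returns a copy of a Create Bot body with the variant added (does not mutate the input).
function withVariant(createBotBody, variant) {
  return { ...createBotBody, variant: buildVariantConfig(variant) };
}
```

`withVariant(body, "web_4_core")` produces the same `variant` object as the JSON example above.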

Limitations on video quality

Upgrading to a larger bot variant (e.g., `web_4_core` or `web_gpu`) can help if your bot is dropping frames or struggling to keep up with real-time processing (i.e. the bot is resource-constrained). However, it won't improve the source video quality: resolution, compression artifacts, and "fuzziness" are determined by the meeting platform itself and can't be changed by the bot. If your video looks pixelated on `web_4_core`, the meeting platform is likely sending a lower-quality stream.

Important

Due to the inherent cost of running larger machines, some variants are priced higher:

| Variant | Pay-as-you-go plan | Monthly plans |
|---|---|---|
| `web_4_core` | $0.60/hour | Standard bot usage rate + $0.10/hour |
| `web_gpu` | $1.50/hour | Standard bot usage rate + $1.00/hour |

Securing your webpage

You likely don't want your webpage to be accessible to anyone who opens its URL. The standard approach to securing an Output Media webpage is to embed a short-lived, pre-authenticated session token in the Output Media URL, which your backend uses to authenticate the request before redirecting to the page.
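The token format is up to you. As one possible sketch, assuming a Node.js backend, you could sign a small payload (session ID plus expiry) with HMAC-SHA256 from `node:crypto` and put it in the URL you pass as `output_media.camera.config.url`. The token layout, the `token` query parameter, and the function names are hypothetical choices for illustration.

```javascript
const nodeCrypto = require("node:crypto");

// Token layout (hypothetical): base64url(JSON payload) + "." + base64url(HMAC-SHA256).
function signPayload(payloadB64, secret) {
  return nodeCrypto.createHmac("sha256", secret).update(payloadB64).digest("base64url");
}

// Creates a short-lived signed URL to use as the Output Media page URL.
function createSignedPageUrl(pageUrl, sessionId, secret, ttlSeconds = 300, nowMs = Date.now()) {
  const payload = { sid: sessionId, exp: Math.floor(nowMs / 1000) + ttlSeconds };
  const payloadB64 = Buffer.from(JSON.stringify(payload)).toString("base64url");
  const url = new URL(pageUrl);
  url.searchParams.set("token", `${payloadB64}.${signPayload(payloadB64, secret)}`);
  return url.toString();
}

// Verifies a token on the backend; returns the session id, or null if the
// signature is wrong, the payload is malformed, or the token has expired.
function verifyPageToken(token, secret, nowMs = Date.now()) {
  const [payloadB64, signature] = String(token).split(".");
  if (!payloadB64 || !signature) return null;
  const expected = Buffer.from(signPayload(payloadB64, secret));
  const actual = Buffer.from(signature);
  // timingSafeEqual requires equal lengths, so check that first.
  if (expected.length !== actual.length || !nodeCrypto.timingSafeEqual(expected, actual)) {
    return null;
  }
  let payload;
  try {
    payload = JSON.parse(Buffer.from(payloadB64, "base64url").toString("utf8"));
  } catch (e) {
    return null;
  }
  if (typeof payload.exp !== "number" || payload.exp < Math.floor(nowMs / 1000)) return null;
  return payload.sid ?? null;
}
```

When the bot loads the URL, your backend would call `verifyPageToken`; on success it could set a session cookie and redirect to the page without the token, and on failure return a 401.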

FAQ

Why is the bot's audio / video output choppy? How can I improve streaming quality?

If the audio or video output from your bot is choppy, your bot's instance likely doesn't have enough CPU power for your use case. You can test this by upgrading the bot to a larger, more powerful instance. The `web_4_core` instance is typically sufficient for most Output Media use cases. To switch to 4-core bots, include this in your Create Bot request:

```json
{
  ...
  "variant": {
    "zoom": "web_4_core",
    "google_meet": "web_4_core",
    "microsoft_teams": "web_4_core"
  }
}
```

What are the browser dimensions of the webpage?

1280x720px

Why doesn't the bot's video/screenshare show in the recording?

It currently isn't possible to include the bot's video or screenshare in the final recording. However, you can include the bot's audio by setting `recording_config.include_bot_in_recording.audio` to `true`.

Can I use the Automatic Audio Output or Automatic Video Output parameters while using Output Media?

No. Output Media cannot be used with the `automatic_video_output` or `automatic_audio_output` parameters; these features are mutually exclusive.

Similarly, the Output Video and Output Audio endpoints cannot be used while your bot is actively using the Output Media feature.

Will the bot's audio/video/transcript be included in the final recording?

You can include the bot's audio in the final recording by setting `recording_config.include_bot_in_recording.audio` to `true` in the Create Bot request.

You cannot include the bot's video or transcript in the final recording at this time. To work around this, we recommend:

- If you only need the transcript:
  - Real-time: fetch the bot's transcript from your TTS provider (if applicable), then merge it with the generated transcript of the other participants by aligning the TTS provider's timestamps with theirs.
  - Async:
    - Configure the bot to include its audio in the final recording by setting `recording_config.include_bot_in_recording.audio` to `true` in the Create Bot request.
    - In the async transcription tab, enable Perfect Diarization to transcribe the call.
    - There are a few items to note:
      - The meeting platform must support separate audio streams to enable Perfect Diarization.
      - The bot's name will be returned as `null`. You can work around this by calling the Retrieve Bot API and reading the `bot_name` you originally set in the Create Bot config.
- If you need the video: send a second bot to the call to record the meeting. This bot captures all participants, including the bot agent that is outputting video. This method also covers transcription, since the bot agent is registered as its own participant.
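For the real-time transcript workaround, the merge step can be as simple as interleaving two time-sorted lists. The sketch below assumes a hypothetical utterance shape (`{ speaker, text, startSeconds }`), that both lists are already sorted by start time, and that you know the offset between when your TTS playback started and the start of the meeting transcript; none of these names come from Recall or any TTS provider.

```javascript
// Shifts TTS timestamps (relative to playback start) onto the meeting timeline.
function alignToMeeting(utterances, offsetSeconds) {
  return utterances.map((u) => ({ ...u, startSeconds: u.startSeconds + offsetSeconds }));
}

// Merges two lists already sorted by startSeconds into one sorted list
// (a standard two-pointer merge; ties go to the bot's utterance).
function mergeTranscripts(botUtterances, participantUtterances) {
  const merged = [];
  let i = 0;
  let j = 0;
  while (i < botUtterances.length || j < participantUtterances.length) {
    const takeBot =
      j >= participantUtterances.length ||
      (i < botUtterances.length &&
        botUtterances[i].startSeconds <= participantUtterances[j].startSeconds);
    merged.push(takeBot ? botUtterances[i++] : participantUtterances[j++]);
  }
  return merged;
}
```

For example, if the bot's first TTS clip started 5 seconds into the meeting, `mergeTranscripts(alignToMeeting(botLines, 5), participantLines)` produces a single chronological transcript.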

Can I make the bot only output audio for an audio-only agent?

No, it is not possible to output only audio into the meeting. The output media feature will always include the webpage as the bot's video. It is also not possible to turn off the camera while output media is on.

As a workaround, developers typically use a placeholder image (e.g. your company logo/brand) or show a black screen instead.
