Transcription FAQ

Frequently asked questions about transcription.

How do I match words with calendar participants or email addresses?

If you are using Recall’s calendar integration, you can receive participant emails in all API responses that contain participant data. You can read more about this feature at Meeting Participant Emails.

Currently, meeting platforms do not expose participant emails directly for privacy reasons. This means there isn’t a built-in way to perfectly match speakers from transcriptions to calendar participants. A common workaround is:

- Use the calendar integration to retrieve participant emails.

- Compare those emails to meeting participant names.

- Apply fuzzy matching between the transcript speaker names and the participant list to associate words in the transcript with the correct email addresses.
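The matching step in this workaround could be sketched roughly as follows. This is a minimal illustration rather than Recall's implementation: the helper names (`normalizeName`, `similarity`, `matchSpeakerToEmail`), the `{ name, email }` shape of the calendar participant list, and the 0.5 threshold are all assumptions made for this example. It scores names by token overlap (a Dice coefficient over word sets), which is only one of many possible fuzzy-matching strategies.

```javascript
// Hypothetical helper: lowercase, replace anything that is not a letter or digit
// with a space, and split into tokens.
function normalizeName(value) {
  return String(value ?? "")
    .toLowerCase()
    .replace(/[^\p{L}\p{N}]+/gu, " ")
    .trim()
    .split(" ")
    .filter(Boolean);
}

// Token-overlap (Dice) similarity between 0 and 1.
function similarity(a, b) {
  const tokensA = new Set(normalizeName(a));
  const tokensB = new Set(normalizeName(b));
  if (tokensA.size === 0 || tokensB.size === 0) return 0;
  let shared = 0;
  for (const token of tokensA) {
    if (tokensB.has(token)) shared += 1;
  }
  return (2 * shared) / (tokensA.size + tokensB.size);
}

// Returns the best-matching email for a transcript speaker name,
// or null if no candidate reaches the (assumed) threshold.
function matchSpeakerToEmail(speakerName, calendarParticipants, threshold = 0.5) {
  let best = { email: null, score: 0 };
  for (const { name, email } of calendarParticipants) {
    // Compare against both the display name and the local part of the email address.
    const localPart = String(email ?? "").split("@")[0];
    const score = Math.max(
      similarity(speakerName, name),
      similarity(speakerName, localPart)
    );
    if (score > best.score) best = { email, score };
  }
  return best.score >= threshold ? best.email : null;
}
```

Returning null below the threshold is a deliberate choice here: leaving a speaker unmatched is usually safer than attaching words to the wrong email address. Tune the threshold against your own data.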

Why is the speaker "None" at the start of a transcript?

At the start of a call, someone may already be speaking before the bot joins. In that case, there is no active speaker event to tie to the first utterance in the transcript, so the first utterance will have a speaker of null (which appears as None when the JSON is parsed in Python).

How do I determine transcription hours for a given bot?

The recommended approach is to check the transcript.data.provider_data_download_url field attached to a recording. The file at this URL contains the raw data received from the transcription provider and should tell you the exact audio duration that was transcribed.

Example provider_data_download_url:

    {
      "transcript": {
        "id": "2c22e1b4-4477-4372-b080-9799a4c7bf5b",
        "created_at": "2025-08-11T20:40:45.996425Z",
        "status": {...},
        "metadata": {...},
        "data": {
          "download_url": "...",
          "provider_data_download_url": "..."
        },
        "diarization": null,
        "provider": {
          "assembly_ai_async": {}
        }
      }
    }

Fetching custom fields from your transcription provider

If you want to extract a specific field from your transcription provider that is not exposed through the Recall API, then you can download the raw provider data from the transcript artifact:

    {
      "id": "3fa85f64-5717-4562-b3fc-2c963f66afa6",
      "data": {
        "provider_data_download_url": "..."
      }
    }

Then download the data from data.provider_data_download_url and extract the specific field you need. See the response format here.
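As a rough sketch of that download-and-extract step, the snippet below takes a transcript artifact object (already retrieved from the API), downloads the raw provider data, and reads one field by a dot-separated path. The function names `getByPath` and `fetchProviderField` are hypothetical, and the field path you pass depends entirely on your provider's response format, which is not specified here. It assumes the raw provider data is JSON and that a `fetch` implementation is available (for example, Node 18+ or a browser).

```javascript
// Hypothetical helper: walk a dot-separated path such as "a.b.c" through nested objects.
function getByPath(obj, path) {
  return path
    .split(".")
    .reduce((node, key) => (node == null ? undefined : node[key]), obj);
}

// Downloads the raw provider data for a transcript artifact and returns one field from it.
// `fetchImpl` is injectable so the function can be exercised without network access.
async function fetchProviderField(transcriptArtifact, fieldPath, fetchImpl = globalThis.fetch) {
  const url = transcriptArtifact?.data?.provider_data_download_url;
  if (!url) {
    throw new Error("Transcript artifact has no data.provider_data_download_url");
  }

  const response = await fetchImpl(url);
  if (!response.ok) {
    throw new Error(`Provider data download failed with status ${response.status}`);
  }

  // Assumption: the raw provider data is a JSON document.
  const providerData = await response.json();
  return getByPath(providerData, fieldPath);
}
```

The same approach could be used to read a duration field when working out transcription hours, provided your provider's raw response includes one.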

How do I get the transcript formatted by sentences?

Recall.ai provides transcripts with word-level timestamps when using Recall.ai Transcription or a Third-Party Transcription. You can then convert this into a more readable format, such as a dialogue transcript.

You can fetch the transcript on the recording via the recording.media_shortcuts.transcript.data.download_url field. You can then pass the transcript JSON to the following function to get the dialogue/caption transcript:

    function parseTranscript(transcript) {
      if (!Array.isArray(transcript)) return [];

      // Coalesce adjacent entries from the same participant into a single entry.
      const keepers = [];
      for (const entry of transcript) {
        const participant = entry?.participant;
        const words = Array.isArray(entry?.words) ? entry.words : [];
        if (!participant?.name || words.length === 0) continue; // skip invalid rows

        const key = participant.id ?? participant.name;
        const last = keepers[keepers.length - 1];
        const lastKey = last && (last.participant.id ?? last.participant.name);

        if (last && key === lastKey) {
          // Append words to the previous entry (keeps all other top-level properties intact).
          last.words.push(...words);
        } else {
          // Shallow-copy the entry and its words array so unknown properties are
          // preserved and the caller's input is not mutated by later appends.
          keepers.push({ ...entry, words: [...words] });
        }
      }

      // Map merged entries to paragraphs with timestamps + duration.
      return keepers
        .map(({ participant, words }) => {
          const paragraph = words.map(w => w?.text).join(" ").trim();
          if (!paragraph) return null;

          // First word with a start; last word with an end (words assumed chronological).
          const first = words.find(w => w?.start_timestamp);
          const last = [...words].reverse().find(w => w?.end_timestamp);

          const startRel = first?.start_timestamp?.relative ?? null;
          const startAbs = first?.start_timestamp?.absolute ?? null;
          const endRel = last?.end_timestamp?.relative ?? null;
          const endAbs = last?.end_timestamp?.absolute ?? null;

          // Duration: prefer relative seconds; else compute from ISO absolute timestamps.
          const duration_seconds =
            startRel != null && endRel != null
              ? endRel - startRel
              : (startAbs && endAbs ? (Date.parse(endAbs) - Date.parse(startAbs)) / 1000 : null);

          return {
            speaker: participant.name,
            paragraph,
            start_timestamp: { relative: startRel, absolute: startAbs },
            end_timestamp: { relative: endRel, absolute: endAbs },
            duration_seconds,
          };
        })
        .filter(Boolean);
    }

This will return something like the following result:

    [
      {
        "speaker": "Gerry Saporito",
        "paragraph": "Hey Jake, how's it going?",
        "start_timestamp": {
          "relative": 187.37506,
          "absolute": "2025-10-14T14:38:09.845Z"
        },
        "end_timestamp": {
          "relative": 190.88506,
          "absolute": "2025-10-14T14:38:13.355Z"
        },
        "duration_seconds": 3.5100000000000193
      },
      {
        "speaker": "Jake Miyazaki",
        "paragraph": "Hey Gerry, doing well? Have you tried Recall.ai transcription yet? It works very well",
        "start_timestamp": {
          "relative": 250.60507,
          "absolute": "2025-10-14T14:39:13.075Z"
        },
        "end_timestamp": {
          "relative": 275.91504,
          "absolute": "2025-10-14T14:39:38.385Z"
        },
        "duration_seconds": 25.309969999999964
      },
      ...
    ]

Who is participant 2147483647?

When using output audio with "include_bot_in_recording": {"audio": true} and perfect diarization, the bot will transcribe the audio that it is outputting into the meeting. In this situation, the audio is assigned to participant ID 2147483647, which is 2^31 - 1 (the largest 32-bit signed integer).
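If you would rather leave the bot's own output audio out of a downstream transcript, one option is to filter on that ID. The sketch below assumes each transcript entry carries a `participant.id` field, as in the transcript format consumed by parseTranscript; the constant and function names are made up for this example.

```javascript
// 2^31 - 1: the participant ID assigned to the bot's own output audio.
const BOT_OUTPUT_PARTICIPANT_ID = 2147483647;

// Returns a new array without entries attributed to the bot's output audio.
// The input array is not modified.
function excludeBotOutput(transcript) {
  if (!Array.isArray(transcript)) return [];
  return transcript.filter(
    (entry) => entry?.participant?.id !== BOT_OUTPUT_PARTICIPANT_ID
  );
}
```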

How many transcripts can I generate for a recording?

A given recording can have up to 10 successful transcripts at one time, and up to 100 attempted transcripts. If you try to create new transcripts beyond these limits, the API will return a 400 and you will need to delete existing transcripts before creating a new one. This protects developers from unexpected or accidental runaway transcription usage.

Can I bring my own recordings (audio/video files) to transcribe?

You can't upload your own recordings (e.g. .mp3, .mp4, .wav) to transcribe at this time.

Instead, you can try transcribing the audio using a third-party transcription provider's integration.

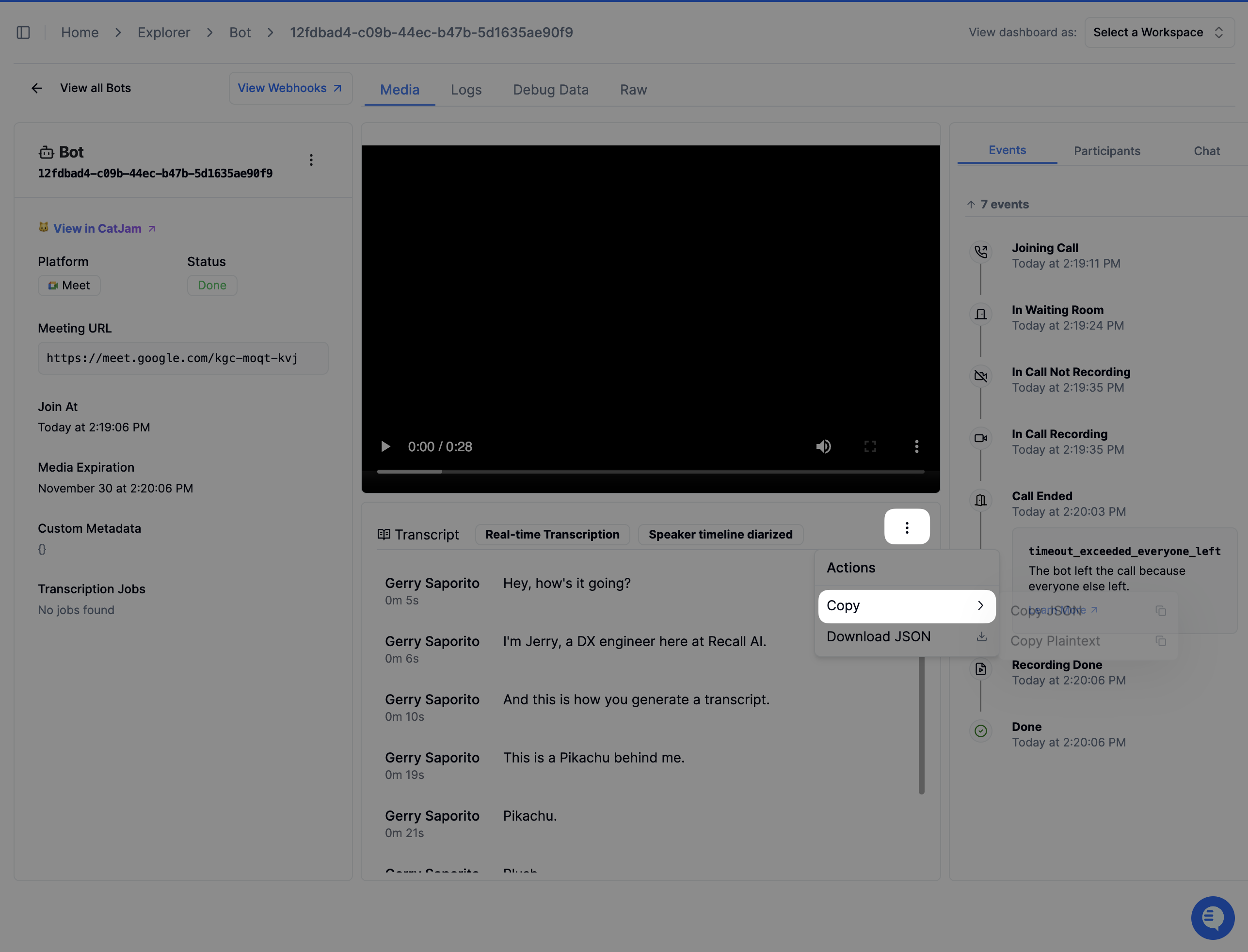

How can I download the transcript from the dashboard?

You can do this in the dashboard under the transcript component by clicking the three dots > Copy > Copy Plaintext.

You can also programmatically generate this transcript yourself by building the dialogue transcript (see "How do I get the transcript formatted by sentences?").
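As a rough sketch, dialogue entries of the shape returned by parseTranscript (`{ speaker, paragraph, ... }`) could be flattened to plain text as shown below. The exact layout of the dashboard's "Copy Plaintext" output is not documented here, so the "Speaker: paragraph" format, the "Unknown speaker" label, and the function name `dialogueToPlaintext` are assumptions.

```javascript
// Converts dialogue entries ({ speaker, paragraph }) into plain text,
// one "Speaker: paragraph" block per turn, separated by blank lines.
function dialogueToPlaintext(dialogue) {
  if (!Array.isArray(dialogue)) return "";
  return dialogue
    .filter((turn) => turn?.paragraph)
    .map((turn) => `${turn.speaker ?? "Unknown speaker"}: ${turn.paragraph}`)
    .join("\n\n");
}
```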

Can I upload content recorded outside of Recall.ai to be transcribed?

Recall.ai can only transcribe recordings that were generated via bots or the Desktop Recording SDK. Currently, you cannot upload media recorded outside of these sources to be transcribed.

Will I be charged if media is captured but it contains no speech (e.g., silence)?

Yes. If media is captured and processed for transcription, it can be billed even if it contains no audible speech (e.g., 20 minutes of silence). The key distinction is between no media being captured and media being captured (even if it is silent).

Why do real-time transcription webhooks come as single words instead of full sentences?

Real-time transcription streams results as soon as they are available (often word by word) to minimize latency, so webhooks often contain single words rather than full sentences.
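If you need sentences on your side, one option is to buffer the streamed words yourself. The sketch below is illustrative only: the `{ speaker, text }` chunk shape and the name `createSentenceBuffer` are hypothetical and do not reflect the actual webhook payload. It also assumes your provider emits sentence-ending punctuation, which not every provider or configuration does.

```javascript
// Creates a buffer that groups streamed words into sentences per speaker.
// `onSentence` is called with { speaker, text } whenever a sentence is complete.
function createSentenceBuffer(onSentence) {
  let speaker = null;
  let words = [];

  // Emit whatever has been collected so far, then reset.
  function flush() {
    if (words.length > 0) onSentence({ speaker, text: words.join(" ") });
    words = [];
  }

  return {
    // Add one streamed chunk. Assumed shape: { speaker: string, text: string }.
    push(chunk) {
      const text = String(chunk?.text ?? "").trim();
      if (!text) return;
      // A change of speaker ends the previous speaker's sentence.
      if (words.length > 0 && chunk.speaker !== speaker) flush();
      speaker = chunk.speaker;
      words.push(text);
      // Sentence-ending punctuation closes the current sentence.
      if (/[.!?]$/.test(text)) flush();
    },
    // Call when the stream ends (or after a pause) to emit any remaining words.
    flush,
  };
}
```

In practice you would likely also flush on a timer, so that a sentence without closing punctuation is not held back indefinitely.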

How do I re-transcribe recordings?

For bots, you can use this script to re-transcribe recordings.
